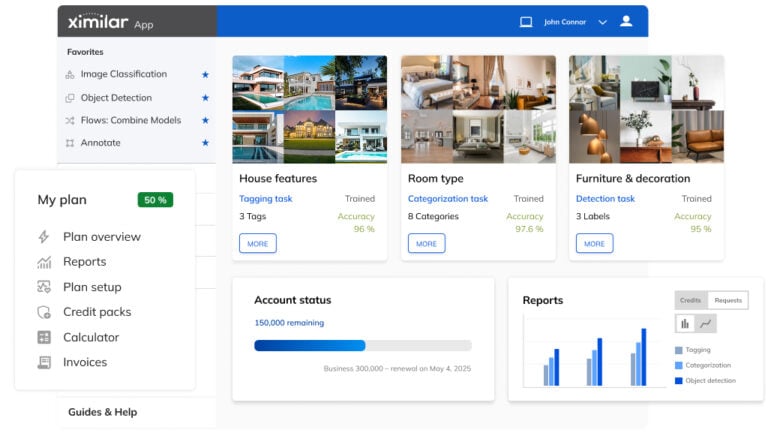

Ximilar offers powerful and easy-to-use image recognition and classification service using deep neural networks. With Ximilar App, you can train your own custom model for image recognition, online and for free. Working with custom data comes with the responsibility of collecting the right dataset. A good dataset is crucial in achieving the highest possible accuracy. Let’s break down some rules for those who are building datasets.

So what are the steps when preparing the dataset?

1. Plan and simplify

In the beginning, we must think about how the computer sees the images. It is important to understand the environment, type of camera or lighting conditions. Want to use the API in a mobile camera? Aim to collect images captured by mobile phone, so they match with future images. Analysing medical images? You can get images from the same point of view, and the neural network learns nuanced patterns. Do you want to analyse many features (e.g., “contains glass” and “is the image blurry”)? Set up more models for each of the features. Don’t mix it up all in one. 😉

If you are not sure, ask the support. They can provide educated advice.

2. Collect images

For all the tasks, try to get the most variable and diverse training dataset. Here are some tips:

- get images from different angles

- change lighting conditions

- take images with good quality and in focus

- change object size and distance/zoom

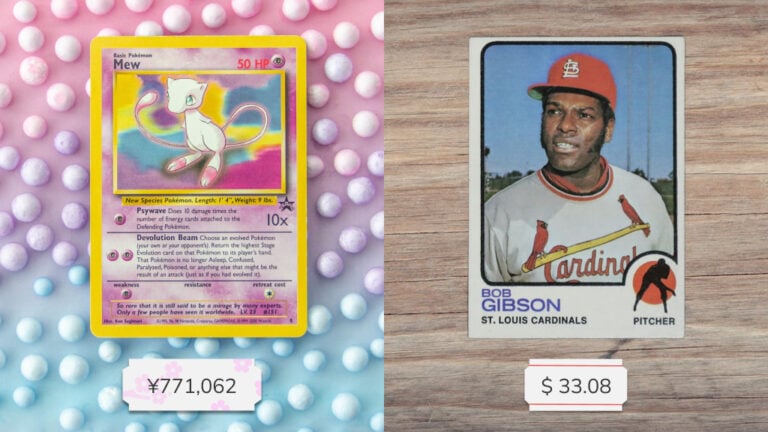

This is especially true for cases when you want to recognise real-world objects. They always vary a lot in their background, image quality, lighting etc. Take this into account and try to create as a realistic dataset as possible. Realistic in the way of how you are going to use the model in future. Training with amazing images and deployment with low-res blurry images won’t deliver a good performance. Don’t worry if you can get enough images, our platform can augment images and generate more data during the training of the machine learning model.

When working with coloured objects, make sure your dataset consists of different colours.

Higher diversity of the dataset leads to higher accuracy.

With Ximilar (vize.ai) the training minimum is as little as 20 images, and you can still achieve great results. However, for more complex and nuanced categories you should think about 50, 100 or even more images for training. You can test with 20 images to understand the accuracy and then add more.

Sometimes it might be tempting to use stock images or images from Bing or Google Search. These will work too, mostly for simple tasks. However, you might hinder the accuracy. There is also a lot of professional image-gathering services that can help you.

3. Sort and upload data

You have your images ready, and it’s time to sort them. When you have only a few categories, you can upload all the images into the mixed zone and label them in our app. For big datasets, it is best to separate training images into different folders and upload them directly to each of the categories in our app.

Make the dataset as clean as possible. Skip images that might confuse you. If you are not sure about the category of a particular image, do not use it.

Think about structure once again. Many times you have more tasks you want to achieve, but you put it all in one and create overlapping categories. For such cases, it is good to create more tasks, where each is trained for a feature you want to recognise.

You can upload images via our front end with a drag-and-drop feature or use our REST API. You can set part of your dataset only for testing, in this way, you can get evaluation numbers (accuracy, precision, recall) that are independent of your training dataset.

More on processing & chaining multiple AI models in the blog post about Flows.

4. Train and test your model

Now comes the exciting part! Training your own neural network and seeing the results. When you send the task to training, we split your dataset into training and testing images. This way, we can evaluate the accuracy of your model.

If you’re happy with the accuracy, you’re just a few lines of code from implementation into your app.

If you want to achieve higher accuracy, you can add more data, fix mislabelled data and retrain the model again. Ximilar App cloud platform keeps the last 5 trained models per task, so you will not lose your previously trained model.