How to Deploy Models to Mobile & IoT For Offline Use

Tutorial for deploying Image Recognition models trained with TensorFlow to your smartphone and edge devices.

Did you know that the number of IoT devices is set to pass 38 billion in 2020? That is a big number. Roughly half of those are connected to the Internet. That is quite a large load for internet infrastructure, even for 5G networks. And some countries have not yet adopted 4G, so internet connectivity can be slow in many cases.

Earlier, in a separate blog post, we mentioned that one day you would be able to download your trained models for offline use. The time is now. We worked for several months with the newest TensorFlow 2+ (KUDOS to the TF team!), rewriting our internal system from scratch, so your trained models can finally be deployed offline.

Tadaaa! That makes Ximilar one of the first machine learning platforms that allow users to train a custom image recognition model with just a few clicks and download it for offline usage!

The feature is active only in custom pricing plans. If you would like to download and use your models offline, please let us know at [email protected], where we are ready to discuss potential options with you.

Let’s get started!

Let’s have a look at how to use your trained model directly on your server, mobile phone, IoT device, or any other edge device. The downloaded model can be run on iOS devices, Android phones, Coral, NVIDIA Jetson, Raspberry Pi, and many others. This makes the most sense when your device is offline. If it is connected to the internet, you can instead query our API to get results from your latest trained model.

Why offline usage?

Privacy, network connectivity, security, and high latency are common customer concerns. Online use can also become a bottleneck when adopting machine learning on a very large scale or in factories for visual quality control. Here are some scenarios in which offline models are worth considering:

- You don’t want your data to leave your private network.

- Your device cannot be connected to the Internet or the connectivity is slow.

- You don’t want to call our API from your mobile for every image you take.

- You don’t want to be dependent on our infrastructure (but, BTW, we have almost 100% uptime).

- You need to do numerous queries (tens of millions) per day and want to run your models on your GPU cards.

Right now, both recognition models and detection models are ready for offline usage!

Before continuing with this article, you should already know how to create your Recognition models.

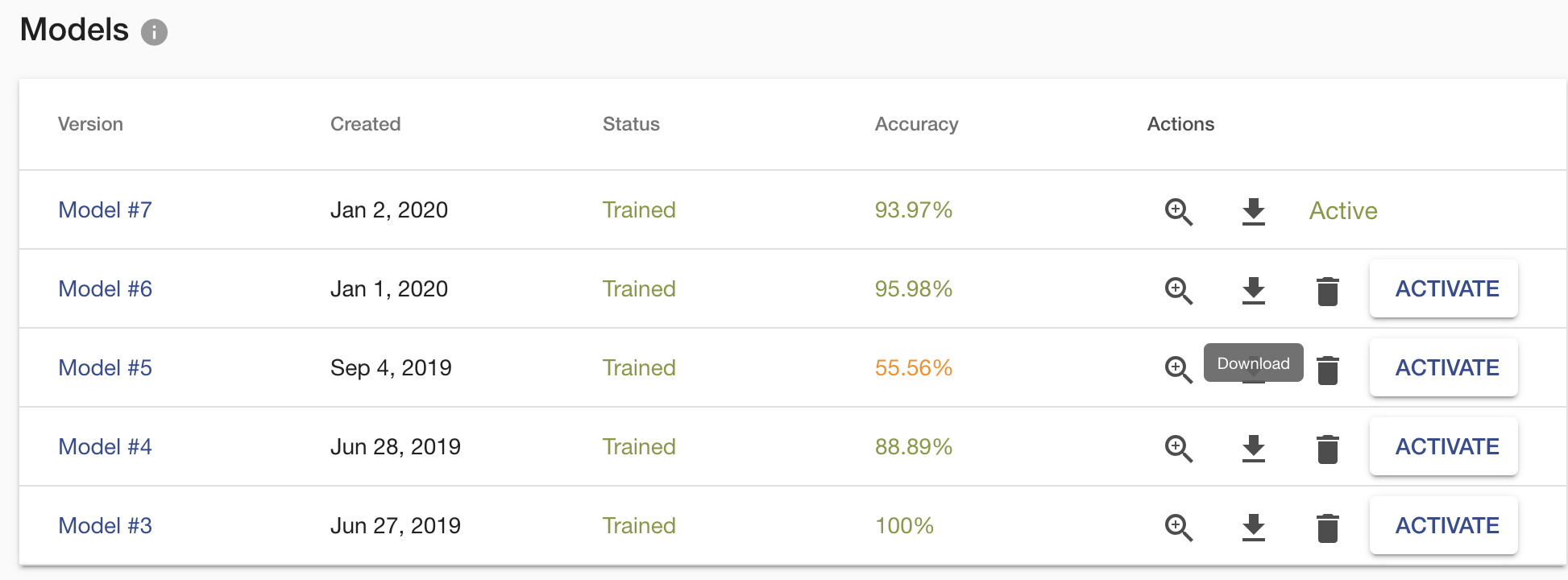

Download

After creating and training your Task, go to the Task page. Once you have permission to download the model, scroll down to the list of trained models and you should see the download icons. Choose the version of the model you are satisfied with and use the icon to download a ZIP file.

This ZIP archive contains several files. The actual model file is located in the tflite folder. It is in the TFLITE format, which can be deployed on a wide range of edge devices. Another essential file is labels.txt, which contains the names of your task labels. The order of the names is important because it corresponds to the order of the model outputs, so don’t mix them up. The default input size of the model is 224×224 pixels. There is also a saved_model folder, which is used when deploying on a server or PC with a GPU.
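To make the relationship between labels.txt and the model outputs concrete, here is a minimal Python sketch. It assumes that labels.txt holds one label name per line and that the model returns one score per label. Both are assumptions, so check them against your own download. The function names and the file paths passed to them are hypothetical. TensorFlow and NumPy are imported only inside `classify`, so the label helpers work without them.

```python
from pathlib import Path


def load_labels(path):
    """Read labels.txt (assumed: one label per line), preserving file order."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    # Drop empty lines such as a trailing newline at the end of the file.
    return [line.strip() for line in lines if line.strip()]


def top_k(scores, labels, k=3):
    """Pair each score with the label at the same index and return the k best."""
    if len(scores) != len(labels):
        raise ValueError("model output size does not match labels.txt")
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def classify(model_path, labels_path, image):
    """Run one image through a TFLite model and return the best labels.

    `image` is assumed to be a NumPy array already resized to the model's
    input size (224x224 by default) and scaled the way the model expects.
    """
    import numpy as np
    import tensorflow as tf  # imported lazily; only needed for inference

    interpreter = tf.lite.Interpreter(model_path=str(model_path))
    interpreter.allocate_tensors()
    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    # Add the batch dimension and match the dtype the model declares.
    batch = np.expand_dims(image, axis=0).astype(input_info["dtype"])
    interpreter.set_tensor(input_info["index"], batch)
    interpreter.invoke()
    scores = interpreter.get_tensor(output_info["index"])[0]
    return top_k(scores.tolist(), load_labels(labels_path))
```

The length check in `top_k` is there because a mismatch between labels.txt and the output vector usually means the wrong labels file was paired with the model.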

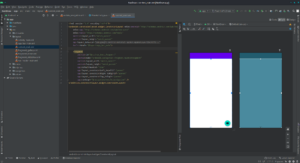

Deploy on Android

That’s it!

Remember, this is just sample code showing how to load the model and use it with your mobile camera. You can adjust and use the code in any way you need.

Deploy on iOS

With iOS, you have two options: you can use either Objective-C or Swift. See the example application for iOS, which is implemented in Swift. If you are a developer, I recommend using this file on GitHub as a starting point. It loads and calls the model. The official quick start guide for iOS from the TensorFlow team is on tensorflow.org.

Workstation/PC/Server

If you want to deploy the recognition model on your server, start with the Ximilar-com/models repository. The folder scripts/recognition contains everything needed to deploy the model on your computer. You need to install TensorFlow version 2.2 or later. If your workstation has an NVIDIA GPU, you can run the model on it. The GPU needs to have at least 4 GB of memory and CUDA Compute Capability 3.7+. Running inference on a GPU makes prediction several times faster. You can also experiment with the batch size of your samples, which we recommend when using a GPU.
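As a rough illustration of experimenting with batch size, the sketch below splits a list of inputs into fixed-size batches and calls a prediction function once per batch. The helper names and the `predict_batch` callable are hypothetical. In practice `predict_batch` would wrap the model loaded from the saved_model folder, whose exact input signature is not covered here, so see scripts/recognition for the real loading code.

```python
def batches(items, batch_size):
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def predict_all(images, predict_batch, batch_size=32):
    """Call `predict_batch` once per batch and return all results in order."""
    results = []
    for batch in batches(images, batch_size):
        # One call per batch lets the GPU process several samples at once.
        results.extend(predict_batch(batch))
    return results
```

Larger batches usually use the GPU more efficiently but need more memory, so a reasonable approach is to try a few values of `batch_size` and measure throughput on your own hardware.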

Edge and Embedded Devices

There is also the option to deploy on Coral, NVIDIA Jetson, or directly to a web browser. We have had great experience with Jetson Nano on small projects. The MobileNetV2 architecture converted to TensorFlow Lite works great. If you need to do object detection, tracking, and counting, we recommend using YOLO architectures converted to TensorRT. YOLO can run on Jetson Nano in real time and is a good fit for factories, assembly lines, and conveyor belts with a small number of product types. You can easily buy and set up a camera on Jetson. We can also develop such models for you and your projects.

Update 2021/2022: We developed an object and image recognition system for NVIDIA Jetson Nano for conveyor belts and factories. Read more in our blog post on how to create a visual AI system for Jetson.

Summary

Now you have another reason to use the Ximilar platform. Of course, with offline models you cannot use Ximilar Flows, which connects your tasks into a complex computer vision system. Otherwise, you can do whatever you want with your model.

To learn more about TFLITE format, see the tflite guide by the TensorFlow team. Big thanks to them!

If you would like to download your model for offline usage, then contact us at [email protected] and our sales team will discuss a suitable pricing model for you.

Tags & Themes

Related Articles

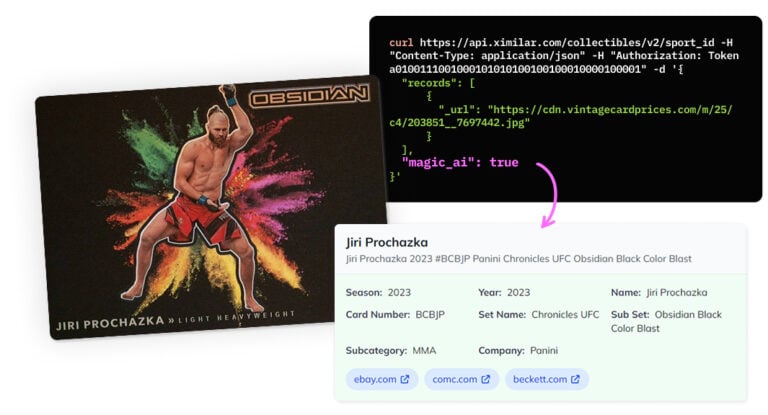

Recognize New & Rare Cards With AI Sports Card Identification

With millions of cards and variations, even the best databases miss some. We refined our sports cards recognition to identify cards even when no match exists.

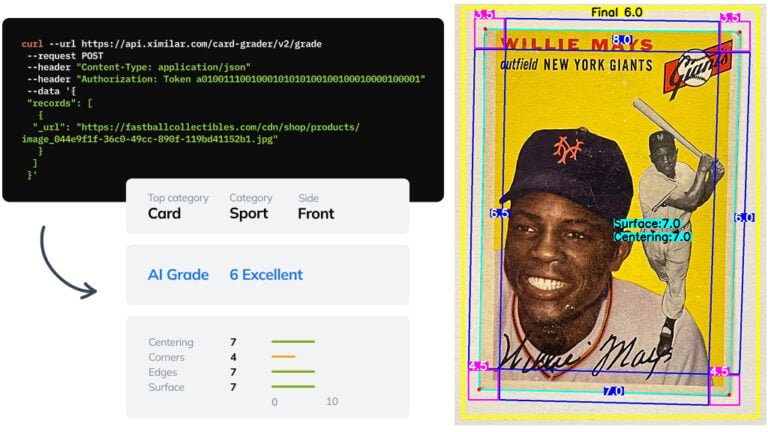

Automate Card Grading With AI via API – Step by Step

A guide on how to easily connect to our trading card grading and condition evaluation AI via API.

How to Automate Pricing of Cards & Comics via API

A step-by-step guide on how to easily get listings of collectibles, such as comic books, manga, trading card games & sports cards.