How Does Machine Learning Work? Like a Brain!

A machine learning model is an algorithm used to build visual AI solutions. This article explains the basics using an analogy to the human brain.

Human Analogy to Describe Machine Learning in Image Classification

I could point to dozens of articles about machine learning and convolutional neural networks. Each describes different details, and sometimes too many of them, so I decided to write my own article built on the parallel between machine learning and the human brain. I will not touch any mathematics or deep learning details. The goal is to stay simple and help people experimenting with Ximilar reach their goals.

Introduction

Machine learning provides computers with the ability to learn without being explicitly programmed.

For images: we want something that can look at a set of images and remember the patterns. When we show a new image to our smart “model”, it will “guess” what is in the image. That’s how people learn!

I mentioned two important words:

- Model: this is what we call a trained machine learning algorithm. It is no longer hand-coded as rules (if green, then grass). It is a structure that can learn and generalize (small, round, green means apple).

- Guess: we are no longer in a binary world. We have moved into the domain of probability. The model returns the likelihood that an image shows an apple.
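To make the idea of a "guess" concrete, here is a minimal sketch of how raw model scores are commonly turned into probabilities with the softmax function. The labels and scores are invented for illustration; the article does not say which function Ximilar's models use.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores a model might produce for one image (assumption).
labels = ["apple", "ball", "grass"]
scores = [2.0, 1.0, 0.1]

probabilities = softmax(scores)
for label, p in zip(labels, probabilities):
    print(f"{label}: {p:.2f}")
```

The highest score gets the highest probability, but the other categories keep a nonzero share, which is why the answer is a likelihood rather than a yes or no.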

Deeper But Still Simple

A model is like a child’s brain. You show an apple to a kid and say, “This is an apple”. After you repeat it 20 times, a connection is established in the kid’s brain and the kid can now recognize apples. What is important is that, at the beginning, the kid cannot distinguish small details. A small ball in your hand is an apple because it follows the same pattern (small, round, green).

The set of images shown to the kid is called the training dataset.

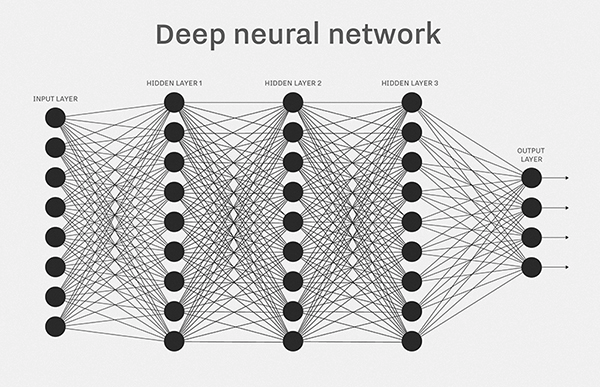

The brain is the model, and it can recognize only the categories present in its training images. A model is made of layers and connections, which makes it similar in structure to our brain. Different parts of the network learn different abstract patterns.

Supervised learning means we have to say “This is an apple” while showing the image. In other words, we add a label to each image.
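The following sketch shows supervised learning on a tiny labeled dataset. It is not a neural network: it swaps in a much simpler technique, a nearest-centroid classifier, and it assumes each image has already been reduced to three hand-made numbers (size, roundness, greenness). All values and names are hypothetical.

```python
from collections import defaultdict

# Hypothetical features per image: (size, roundness, greenness), each in 0..1.
# Every example carries a label, which is what makes the learning "supervised".
training_dataset = [
    ((0.30, 0.90, 0.80), "apple"),
    ((0.35, 0.85, 0.70), "apple"),
    ((0.20, 0.10, 0.90), "grass"),
    ((0.25, 0.15, 0.95), "grass"),
]

def train(dataset):
    """Average the features of each label into one prototype per category."""
    sums = {}
    counts = defaultdict(int)
    for features, label in dataset:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }

def predict(model, features):
    """Return the label whose prototype is closest to the new example."""
    def distance(prototype):
        return sum((a - b) ** 2 for a, b in zip(prototype, features))
    return min(model, key=lambda label: distance(model[label]))

model = train(training_dataset)
new_apple = predict(model, (0.32, 0.88, 0.75))
green_ball = predict(model, (0.30, 1.00, 0.80))  # small, round, green
print(new_apple, green_ball)
```

The green ball lands in the apple category because the model only knows the categories it was trained on, the same mistake the child makes in the analogy.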

Simple deep learning network

Evaluation – Model Accuracy

In human terms, this is like exam time. At school, we learn a lot of information and general concepts. To find out how much we actually know, the teacher prepares a set of questions we have not seen in our study books. Then our brain is evaluated, and we learn that, say, 9 out of 10 questions were answered correctly.

The teacher’s questions are what we call the testing dataset. It is usually split off from the training dataset before training (20% of the provided pictures in our case).
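A minimal sketch of such a split, assuming a simple random shuffle followed by an 80/20 cut. The function name, file names, and seed are hypothetical, and the exact procedure Ximilar uses is not described here.

```python
import random

def split_dataset(items, test_fraction=0.2, seed=42):
    """Shuffle the items and set aside a fraction of them for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    test_size = round(len(shuffled) * test_fraction)
    # The first test_size items become the exam, the rest are for learning.
    return shuffled[test_size:], shuffled[:test_size]

# Hypothetical file names standing in for uploaded pictures.
image_names = [f"image_{i:03d}.jpg" for i in range(100)]
training_set, testing_set = split_dataset(image_names)
print(len(training_set), len(testing_set))
```

The important property is that no picture appears in both sets, so the model is examined only on images it has never seen.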

Accuracy is the percentage of test images the model answers correctly. What is important: we do not care how sure the model is about its answer. We only care about the final answers.
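As a small illustration, accuracy can be computed by taking the most probable label for each test image and counting the matches. The predictions and labels below are invented for the example.

```python
def accuracy(predictions, true_labels):
    """Percentage of images whose most probable label matches the truth."""
    correct = sum(
        1
        for probs, truth in zip(predictions, true_labels)
        if max(probs, key=probs.get) == truth  # only the final answer counts
    )
    return 100.0 * correct / len(true_labels)

# Hypothetical model outputs for four test images (assumption).
predictions = [
    {"apple": 0.99, "ball": 0.01},  # very sure and correct
    {"apple": 0.51, "ball": 0.49},  # barely sure, still counted as correct
    {"apple": 0.60, "ball": 0.40},  # wrong: the image shows a ball
    {"apple": 0.20, "ball": 0.80},  # correct
]
true_labels = ["apple", "apple", "ball", "ball"]

score = accuracy(predictions, true_labels)
print(f"{score:.0f}%")
```

The 0.99 answer and the 0.51 answer contribute equally, which is exactly the sense in which accuracy ignores confidence.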

Limits of Computers

Why don’t we have computers with human-level skills yet? Because the brain is the most powerful computer. It has amazing processing power, huge memory and some magical sauce we don’t even understand.

Our computer models are limited in memory and computational power. We have plenty of storage, but we are short on the very fast memory that processors can access directly. Computational power is limited by heat, technology, price, and so on.

Bigger models can hold more information but take longer to train. This is why AI development in 2017 focuses on making models:

- smaller,

- less computationally intensive,

- able to learn more information.

Connection to Custom Image Recognition

This technology is what drives our custom image classification API. People without deep knowledge can build an image recognizer in a few minutes. Sometimes clients ask me whether we can recognize 10,000 categories with one training image for each. Imagine a kid’s brain learning this. It is nearly impossible. The idea is that the more categories you want your child to know, the more images the child has to see. It takes ages for our brains to develop and understand the world. Just as a child starts with basic objects, a model should start with basic categories.

What a child is confident about early on is telling good from bad. Likewise, models trained to tell good from bad can be very accurate and do not need many images.

Summary

I tried to simplify machine learning to a visual task only and compare it with something we all know. At Ximilar, we often think of the human brain while experimenting with new models and processing pipelines. I will be happy to hear your feedback.

This article was originally published by David Rajnoch.

David Novák

Computer Vision Expert & Founder

David founded Ximilar after more than ten years of academic research. He wanted to build smart AI products not only for the corporate sphere, but especially for medium to small businesses. He has extensive experience in both computer vision research and its practical applications.

Tags & Themes

Related Articles

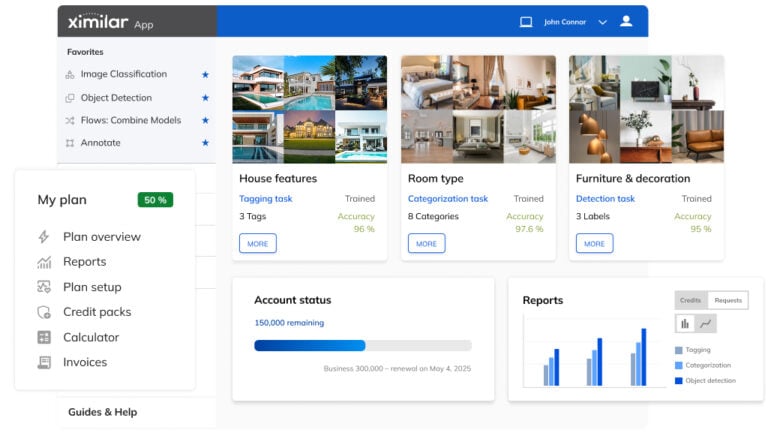

Getting Started with Ximilar App: Plan Setup & API Access

Ximilar App is a way to access computer vision solutions without coding and to gain your own authentication key to use them via API.

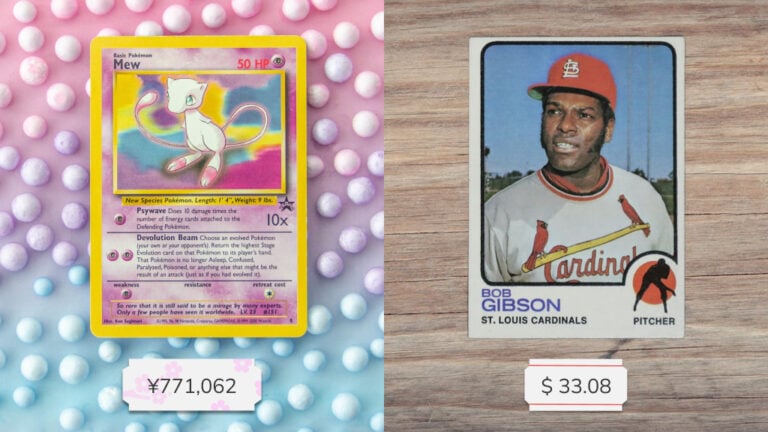

Get an AI-Powered Trading Card Price Checker via API

Our AI price guide can be used for value tracking of cards and comic books, offering accurate pricing data and their history.

Identify Comic Books & Manga Via Online API

Get your own AI-powered comics and manga image recognition and search tool, accessible through REST API.