Behind the Scenes: Ximilar and the Intel AI Builders Program

Becoming an Intel AI partner helps us scale our SaaS platform for image recognition and visual search.

When Ximilar was founded, many crucial decisions needed to be made. One of them was where to run our software. After careful consideration, we bought our first servers, and all our services have run on our own hardware ever since.

This has, of course, brought a great number of challenges, but also many opportunities. We would like to share some of our latest accomplishments with you. Above all, we are proud to announce that we have become a part of the Intel AI Builders Program. Having direct access to technical resources and support from Intel helps us use our hardware in the best way possible.

Running the entire MLaaS (machine learning as a service) platform on our cloud infrastructure as efficiently as possible and delivering high-quality results are both essential for us.

Making The Most of It

Knowing our servers well enables us to optimize the models we run on them to be very efficient. It is not hard to get incredible amounts of computational power today, but we still believe that it is worth using that power to its fullest potential. We love saving energy and resources while providing our services at reasonable prices.

Machine learning computations are performed by both GPUs (graphics processing units) and CPUs (central processing units). During training, the CPU prepares the images, and the GPU optimizes the artificial neural network. For prediction, either of them can be used. Generally, we use a GPU only when the model is huge or when we need the results very fast. In other cases, the CPU is sufficient. Recently, we focused on optimizing our models so that prediction runs faster on our Intel Xeon CPUs.
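The rule of thumb above can be written down as a small routing function. This is only an illustrative sketch: the `ModelProfile` type, the `choose_device` helper, and both thresholds are hypothetical and are not taken from our production setup.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
HUGE_MODEL_PARAMS = 100_000_000  # above this, the model counts as "huge"
FAST_LATENCY_MS = 50.0           # below this budget, results are needed "very fast"


@dataclass
class ModelProfile:
    """Minimal description of a deployed model (hypothetical type)."""
    name: str
    num_parameters: int
    latency_budget_ms: float


def choose_device(profile: ModelProfile) -> str:
    """Return "GPU" for huge models or tight latency budgets, otherwise "CPU"."""
    is_huge = profile.num_parameters > HUGE_MODEL_PARAMS
    needs_speed = profile.latency_budget_ms < FAST_LATENCY_MS
    return "GPU" if (is_huge or needs_speed) else "CPU"
```

With these assumed thresholds, a small tagging model with a relaxed latency budget would be routed to the CPU, which is the case the CPU optimizations target.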

We use the TensorFlow library for our machine learning models. It was developed by Google and released as open source in 2015. Google also came up with its own hardware for machine learning: Tensor Processing Units, or TPUs for short. Nevertheless, Google remains interested in other hardware platforms as well and provides XNNPACK, an optimized library of floating-point neural network inference operators.

OpenVINO Toolkit

However, we achieved the best results by using the OpenVINO Toolkit from Intel. It consists of two basic parts: a model optimizer, which is run after a model is trained, and an inference engine for predictions. With it, we are able to speed up some of our tasks fivefold. The predictions run especially efficiently on larger images and larger batches.
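A speedup figure like this comes from timing the same batch on two backends. The sketch below shows one way such a comparison could be measured. It is not our actual benchmark: `seconds_per_image` and `speedup` are hypothetical helpers, and the `predict` callables stand in for whatever wraps the original and the optimized model.

```python
import time
from typing import Callable, Sequence


def seconds_per_image(predict: Callable[[Sequence], object],
                      batch: Sequence, repeats: int = 5) -> float:
    """Average wall-clock seconds per image when calling predict on a batch."""
    if not batch:
        raise ValueError("batch must not be empty")
    predict(batch)  # warm-up call, excluded from the timing
    start = time.perf_counter()
    for _ in range(repeats):
        predict(batch)
    elapsed = time.perf_counter() - start
    return elapsed / (repeats * len(batch))


def speedup(baseline: Callable[[Sequence], object],
            optimized: Callable[[Sequence], object],
            batch: Sequence, repeats: int = 5) -> float:
    """How many times faster `optimized` is than `baseline` on the same batch."""
    return (seconds_per_image(baseline, batch, repeats)
            / seconds_per_image(optimized, batch, repeats))
```

Running `speedup` with batches of different sizes and image resolutions would show where an optimized backend pays off most.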

So far, we use OpenVINO for our image recognition service and for various recognition tasks in our fashion services. For example, fashion tagging and visual search run many models on a single image, so every improvement in efficiency is even more noticeable there. But we are not forgetting the rest of our portfolio, and we are working hard to extend OpenVINO to all the different services we provide.

Last but not least, we would like to thank Intel for their support, and we are looking forward to our cooperation in the coming years!

Do you want to know more? Read a blog on the Intel AI website.

Libor Vanek

Libor is a skilled machine learning developer with extensive experience in the fields of artificial intelligence and computer vision. He helped us build many innovative solutions and moved on to more specialized projects in OCR and LLMs.

Tags & Themes

Related Articles

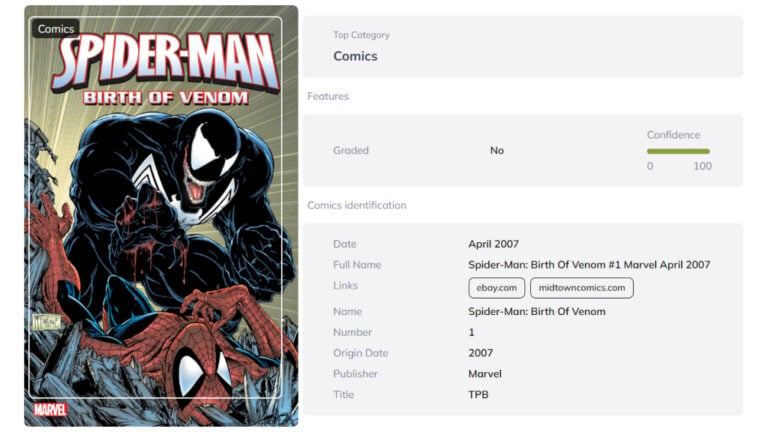

Identify Comic Books & Manga Via Online API

Get your own AI-powered comics and manga image recognition and search tool, accessible through REST API.

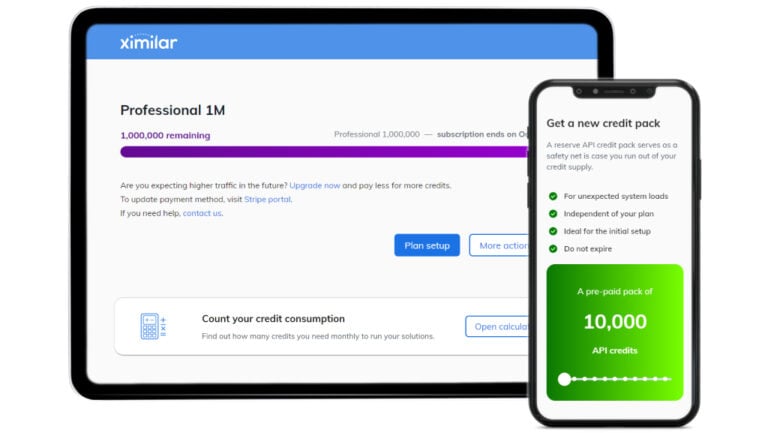

We Introduce Plan Overview & Advanced Plan Setup

Explore new features in our Ximilar App: streamlined Plan overview & Setup, Credit calculator, and API Credit pack pages.

New AI Solutions for Card & Comic Book Collectors

Discover the latest AI tools for comic book and trading card identification, including slab label reading and automated metadata extraction.