Flows – The Game Changer for Next-Generation AI Systems

Flows is a service for combining machine learning models for image recognition, object detection and other AI services into API.

We have spent thousands of man-hours on this challenging subject. Gallons of coffee later, we introduced a service that might change how you work with data in Machine Learning & AI. We named this solution Flows. It enables simple and intuitive chaining and combining of machine learning models. This simple idea speeds up the workflow of setting up complex computer vision systems and brings unseen scalability to machine learning solutions.

We are here to offer a lot more than just training models, as common AI companies do. Our purpose is not to develop AGI (artificial general intelligence), which is going to take over the world, but easy-to-use AI solutions, that can revolutionize many areas of both business and daily life. So, let’s dive into the possibilities of flows in this 2021 update of one of our most-viewed articles.

Flows: Visual AI Setup Cannot Get Much Easier

In general, at our platform, you can break your machine learning problem down into smaller, separate parts (recognition, detection, and other machine learning models called tasks) and then easily chain & combine these tasks with Flows to achieve the full complexity and hierarchical classification of a visual AI solution.

A typical simple use case is conditional image processing. For instance, the first recognition task filters out non-valid images, then the next one decides a category of the image and, according to the result, other tasks recognize specific features for a given category.

Flows allow your team to review and change datasets of all complexity levels fast and without any trouble. It doesn’t matter whether your model uses three simple categories (e.g. cats, dogs, and guinea pigs) or works with an enormous and complex hierarchy with exceptions, special conditions, and interdependencies.

It also enables you to review the whole dataset structure, analyze, and, if necessary, change its logic due to modularity. With a few clicks, you can add new labels or models (tasks), change their chaining, change the names of the output fields, etc. Neat? More than that!

Think of Flows as Zapier or IFTTT in AI. With flows, you simply connect machine learning models, and review the structure anytime you need.

Define a Flow With a Few Clicks

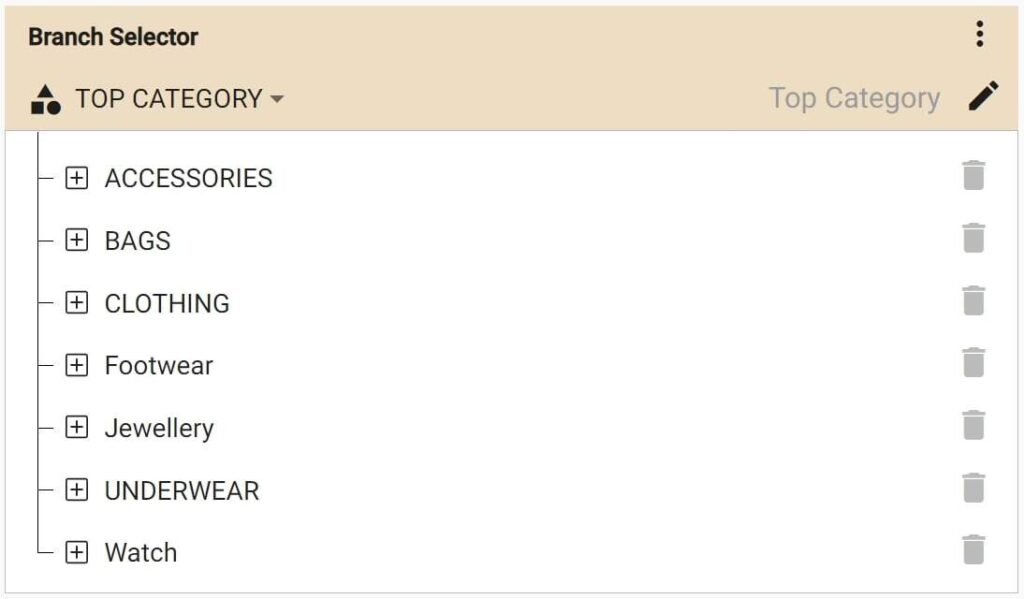

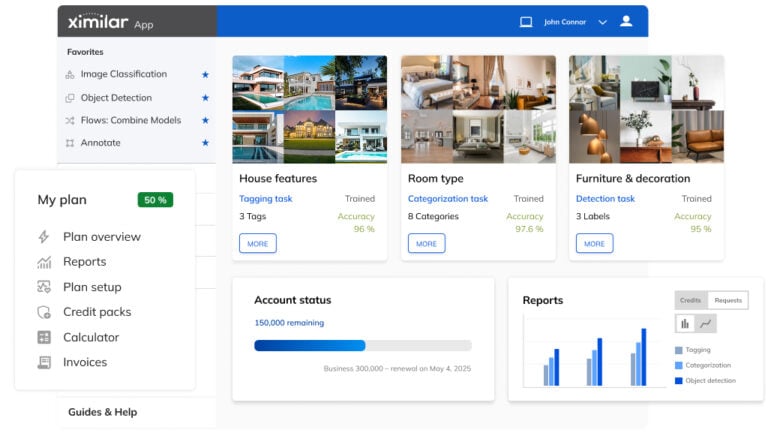

Let’s assume we are building a real estate website, and we want to automatically recognize different features that we can see in the photos. Different kinds of apartments and houses have various recognizable features. Here is how we can define this workflow using recognition flows (we trained each model with a custom image recognition service):

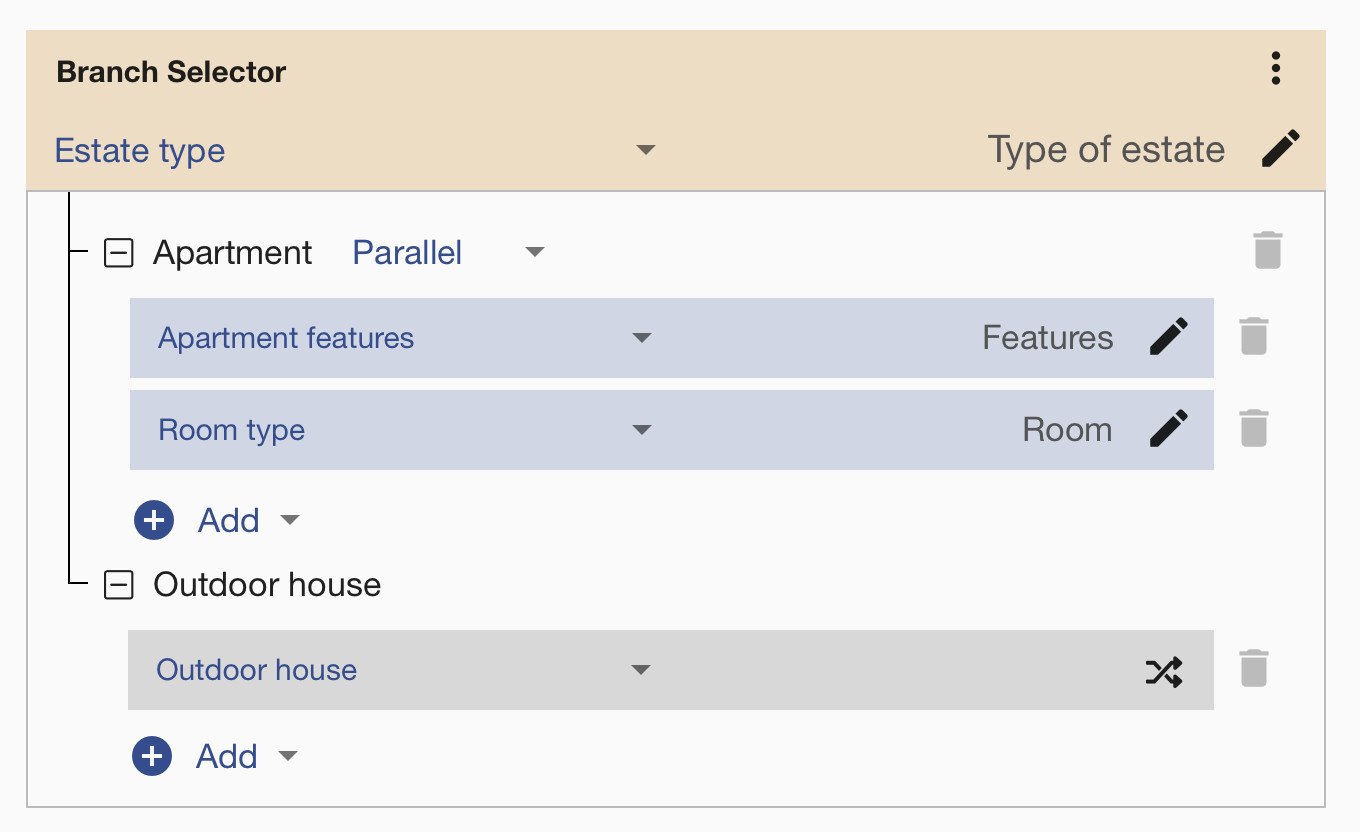

The image recognition models are chained in a “main” flow called the branch selector. The branch selector saves the result in the same way as a recognition task node and also chooses an action based on the result of this task. First, we let the top category task recognize the type of estate (Apartment vs. Outdoor house). If it is an apartment, we can see that two subsequent tasks are “Apartment features” and “Room type”.

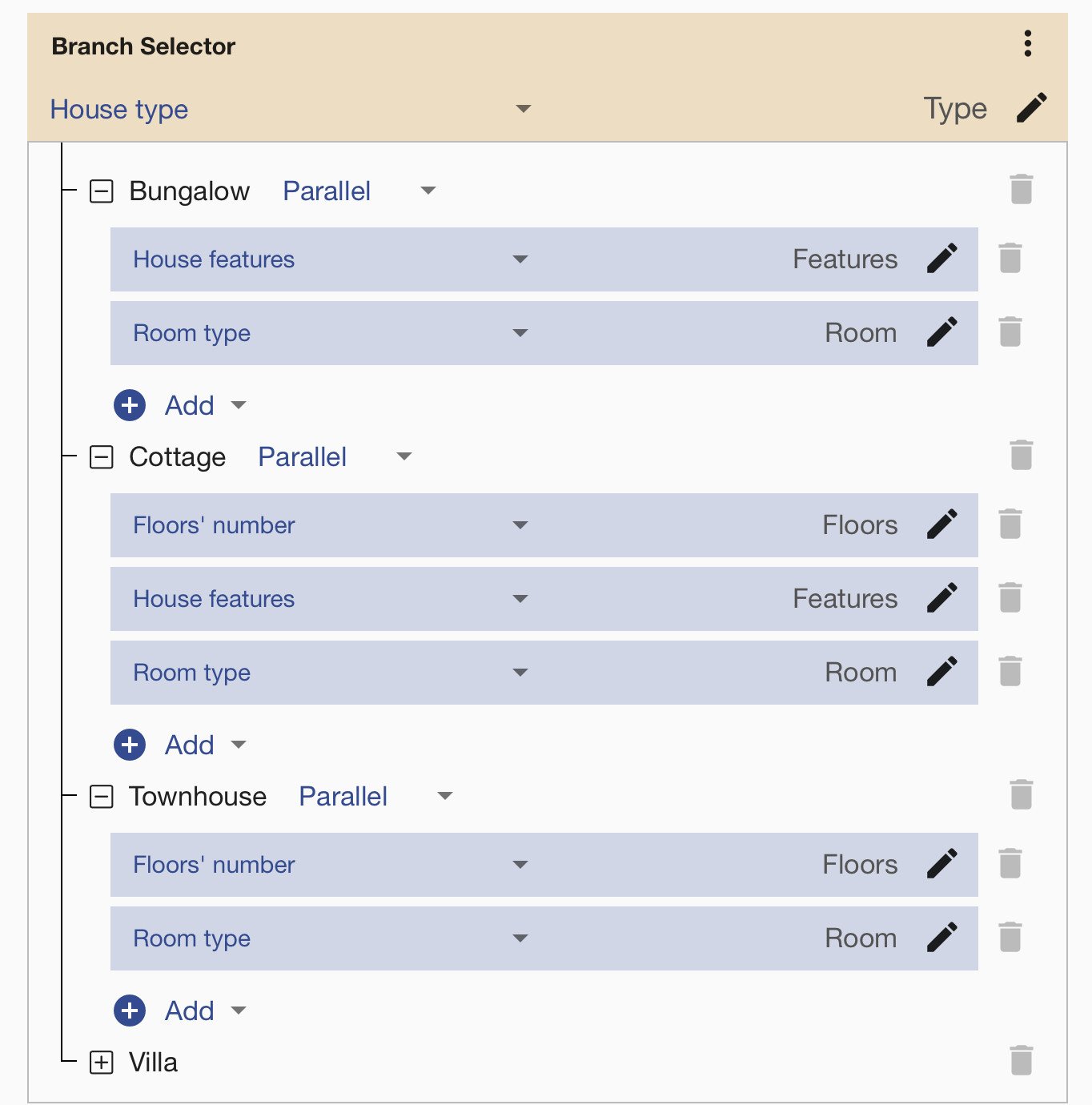

A flow can also call other flows, so-called nested flows, and delegate part of the work to them. If the image is an outdoor house, we continue processing by another nested flow called “Outdoor house”. In this flow, we can see another branching according to the task that recognizes “House type”. Different tasks are called for individual categories (Bungalow, Cottage, etc.):

Flow Elements We Used

So far, we have used three elements:

- A recognition task, that simply calls a given task and saves the result into an output field with a specified name. No other logic is involved.

- A branch selector, on the other hand, saves the result in the same way as a recognition task node, but then it chooses an action based on the result of this task.

- Nested flow, another flow of tasks, that the “main” flow (branch selector) called.

Implicitly, there is also a List element present in some branches. We do not need to create it, because as soon as we add two or more elements to a single branch, a list generates in the background. All nodes in a list are normally executed in parallel, but you can also set sequential execution. In this case, the reordering button will appear.

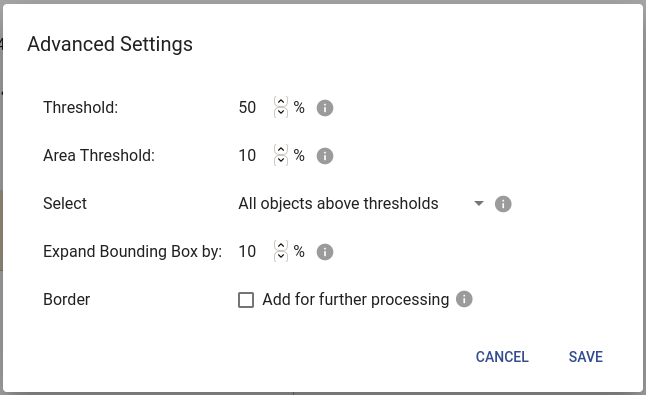

Branch Selector – Advanced Settings

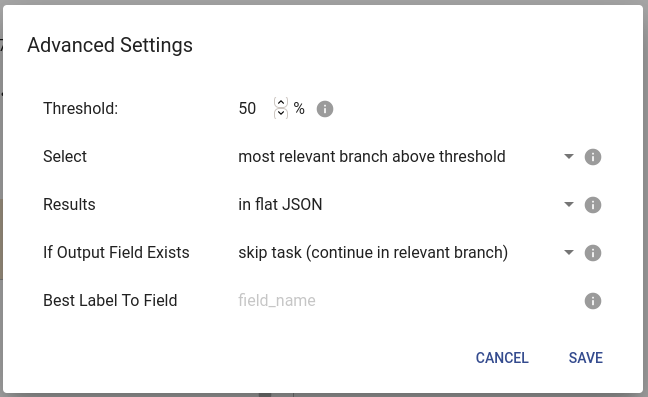

The branch selector is a powerful element. It’s worthwhile to explore what it can do. Let’s go through the most important options. In a single branch, by default, only actions (tag or category) with the highest relevance will be performed, provided the relevance (the probability outputted by the model) is above 50 %. But we can change this in advanced settings. We can specify the threshold value and also enable parallel execution of multiple branches!

You can specify the format of the results. Flat JSON means that results from all branches will be saved on the same level as any previous outcomes. And if there are two same output names in multiple branches, they can be overwritten. The parallel execution guarantees neither order nor results. You can prevent this from happening by selecting nested JSON, which will save the results from each branch under a separate key, based on the branch name (that is the tag/category name).

If some data (output_field) are present in the incoming request, we can skip calling the branch selector processing. You can define this in If Output Field Exists. This way we can save credits and also improve the precision of the system. I will show you how useful this behaviour can be in the next paragraphs. To learn about the advanced options of training, check this article.

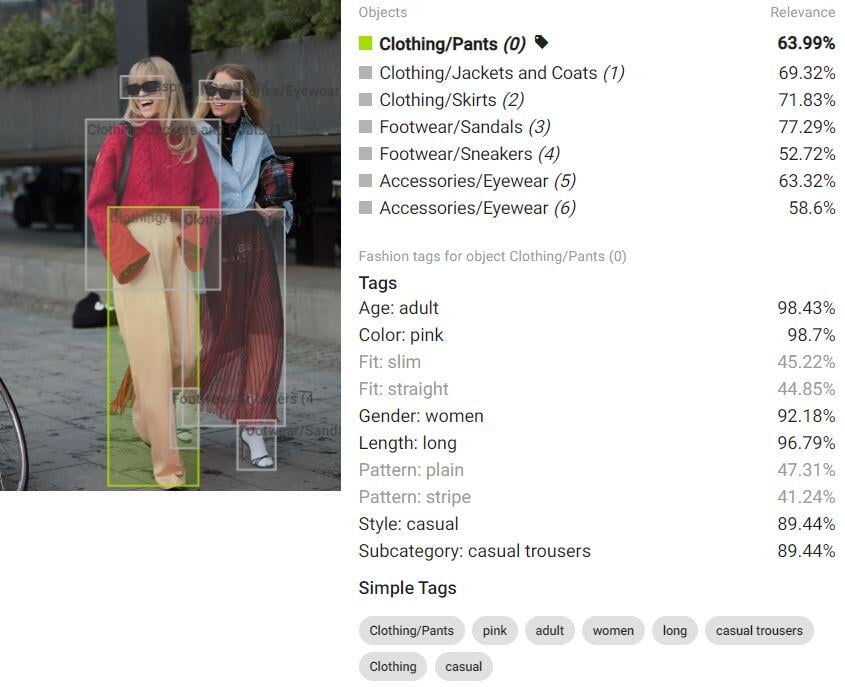

An Example: Fashion Detection With Tags

We have just created a flow to tag simple and basic pictures. That is cool. But can we really use it in real-life applications? Probably not. The reason is, in most pictures, there is usually more than one clothing item. So how are we going to automate the tagging of more complex pictures? The answer is simple: we can integrate object detection into flows and combine it with recognition & tagging models!

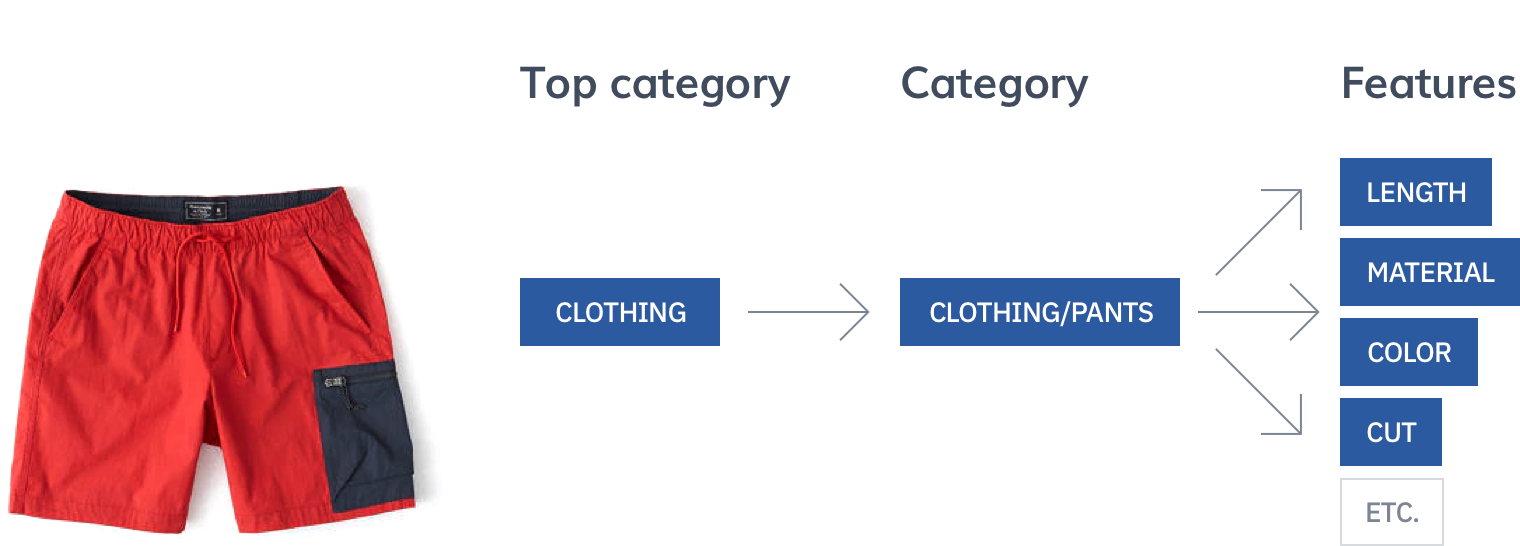

The flow structure then exactly mirrors the rich product taxonomy. Each image goes through a taxonomy tree in order to get proper tags. This is our “top classifier” – a flow that can tell one of our seven top categories of a fashion product image, which will determine how the image will be further classified. For instance, if it is a “Clothing” product, the image continues to “Clothing tagging” flow.

Similar to categorization or tagging, there are two basic nodes for object detection: the Detection Task for simple execution of a given task and Object Selector, which enables the processing of the detected objects.

Object Selector will call the object detection task. The detected objects will be extracted out of the image and passed further to any of the available nodes. Yes, any of them! Even another Object Selector, if, for example, you need to first detect people and then detect clothes on each person separately.

Object Selector – Advanced Settings

Object Selector behavior can be customized in similar ways as a Branch Selector. In addition to the Probability Threshold, there is also an Area Threshold. By default, all objects are processed. By setting this threshold, the objects that do not take at least a given percentage of an image are simply ignored. This can be changed to a single object by probability or area in Select. As I mentioned, we extract the object before further processing. We can extend it a bit to include some context using Expand Bounding Box by…

A Typical Flows Application: Fashion Tagging

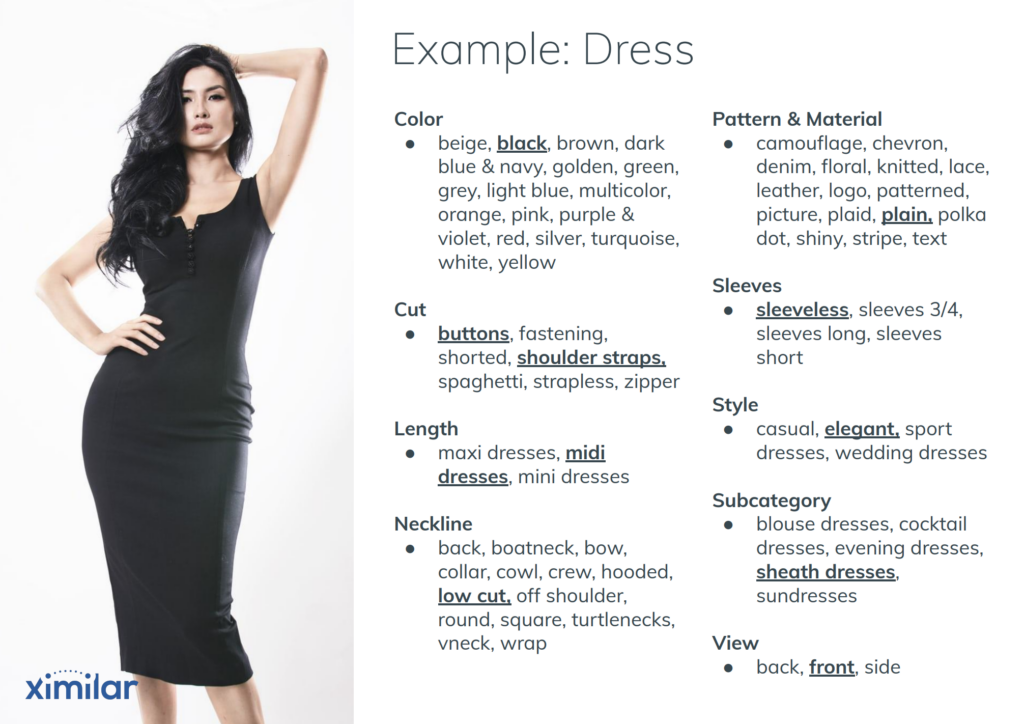

We have been playing with the fashion subject since the inception of Ximilar. It is the most challenging and also the most promising one. We have created all kinds of tools and helpers for the fashion industry, namely Fashion Tagging, specialized Fashion Search, or Annotate. We are proud to have a very precise automatic fashion tagging service with a rich fashion taxonomy.

And, of course, Fashion Tagging is internally powered by Flows. It is a huge project with several dozens of features to recognize, about a hundred recognition tasks, and hundreds of labels all chained into several interconnected flows. For example, this is what our AI says about a simple dress now – and you can try it on your picture in the public demo.

Include Pre-trained Services In Your Flow

The last group of nodes at your disposal are Ximilar services. We are working hard and on an ever-growing number of ready-to-use services which can be called through our API and integrated into your project. It is natural for our users to combine more AI services, and flows make it easier than ever. At this moment, you can call these ready-to-use recognition services:

But more will come in the future, for example, Remove Background.

Increasing Possibilities of Flows

As our app and list of services grow, so do the flows. There are two features we are currently looking forward to. We are already building custom similarity models for our customers. As soon as they are ready, they will be available for combining in flows. And there is one more item very high on our list, which is predicting numeric values. Regression, in machine learning terms. Stay tuned for more exciting news!

Create Your Flow – It’s Free

Before Flows, setting up the AI Vision process was a tedious task for a skilled developer. Now everyone can set up, manage and alter steps on their own. In a comprehensive, visual way. Being able to optimize the process quickly, getting a faster response, losing less time and expenses, and delivering higher quality to customers.

And what’s the best part? Flows are available to the users of Ximilar’s free plan, so you can try them right away. Register or sign up to the Ximilar App and enter Flows service at the Dashboard. If you want to learn the basics first, check out our video tutorials. Then you can connect tasks and labels defined in your own Image Recognition.

Training of machine learning models is free with Ximilar, you are only paying for API calls for recognition. Read more about API calls or API credit packs. We strongly believe you will love Flows as much as we enjoyed bringing them to life. And if you feel like there is a feature missing, or if you prefer a custom-made solution, feel free to contact us!

Tags & Themes

Related Articles

Getting Started with Ximilar App: Plan Setup & API Access

Ximilar App is a way to access computer vision solutions without coding and to gain your own authentication key to use them via API.

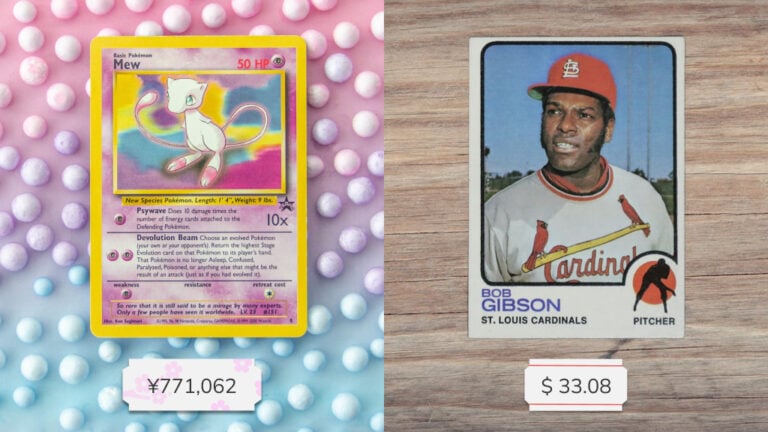

Get an AI-Powered Trading Card Price Checker via API

Our AI price guide can be used for value tracking of cards and comic books, offering accurate pricing data and their history.

Identify Comic Books & Manga Via Online API

Get your own AI-powered comics and manga image recognition and search tool, accessible through REST API.